Back in the ’90s and very early 2000s, a whole market segment of computers existed that we don’t really talk about anymore today: the UNIX workstation. They were non-x86 machines running one of the many commercial UNIX variants, and were used for the very high end of computing. They were expensive, unique, different, and quite often incredibly overengineered.

Countless companies made and sold these UNIX workstations. SGI was a big player in this market, with its fancy, colourful MIPS machines running IRIX. There was also Sun Microsystems (and Oracle, at the tail end), selling ever more powerful UltraSPARC workstations running Solaris. Industry legend DEC sold Alpha machines running Digital UNIX (later renamed Tru64 UNIX after DEC was acquired by Compaq in 1998). IBM, of course, also sold UNIX workstations, powered by its own POWER and PowerPC processors and running AIX.

As x86 became ever more powerful and versatile, and with the rise of Linux as a capable UNIX replacement and the adoption of the NT-based versions of Windows, the days of the UNIX workstations were numbered. A few years into the new millennium, virtually all traditional UNIX vendors had ended production of their workstations and in some cases even their associated architectures, with a lacklustre collective effort to move over to Intel’s Itanium – which didn’t exactly go anywhere and is now nothing more than a sour footnote in computing history.

By around 2010, the UNIX workstations had all disappeared. Development of MIPS, UltraSPARC (for workstations), Alpha, and others had been wound down, and with a few exceptions, the various commercial UNIX variants were left to languish in extended-support purgatory; by now, they’re all pretty much dead (save for Solaris and AIX). Users and industries moved on to x86 on the hardware side, and to Linux, Windows, and in some cases Mac OS X on the software side.

I’ve always been fascinated by these UNIX workstations. They were mysterious, unique computers running software that was entirely alien to me, and they were impossibly expensive. Over the years, I’ve owned exactly one of these machines – a Sun Ultra 5 running Solaris 9 – and I remember enjoying that little machine greatly. Back then I was a student living in a tiny apartment with not much money to spare, but in those days you couldn’t load a single page on an online auction website without stumbling over piles of Ultra 5s and other UNIX workstations, so they were cheap and plentiful.

Even as my financial situation improved and money was no longer tight, my apartment was still far too small to house more computers, especially since UNIX workstations tended to be big and noisy. Fast forward to the 2020s, however, and everything’s changed. My house has plenty of space, and I even have a dedicated office for work and computer nonsense, so I’ve got more than enough room to indulge in a few UNIX workstations. It was time to get back in the saddle.

But soon I realised times had changed.

Over the past few years, I have come to learn that if you want to get into buying, using, and learning from UNIX workstations today, you’ll run into problems that fall roughly into three categories: hardware availability, operating system availability, and third-party software availability. I’ll walk through all three and give some examples I’ve encountered, most of them based on my purchase of a UNIX workstation from a vendor I haven’t mentioned yet: Hewlett-Packard.

Hardware availability: a tulip for a house

The first place most people would go to in order to buy a classic UNIX workstation is eBay. Everyone’s favourite auction site and online marketplace is filled with all kinds of UNIX workstations, from the ’80s all the way up to the final machines from the early 2000s. You’ll soon notice, however, that pricing seems to have gone absolutely – pardon my Gaelic – absolutely batshit insane.

Are you interested in a Sun Ultra 45 from 2005, without any warranty and excluding shipping? That’ll be anywhere from €1500 to €2500. Or are you more into SGI, and looking to buy a 175 MHz Indigo2 from the mid-’90s? Better pony up at least €1250. Something as underpowered as a Sun Ultra 10 from 1998 will go for anywhere between €700 and €1300. Something more powerful, like an SGI Fuel? Forget about it.

Going to a refurbisher won’t help much either. Just these past few days I was in contact with a refurbisher here in Sweden who is charging over €4000 for a Sun Ultra 45. For a US perspective, UNIX HQ, for instance, has quite a decent selection of machines, but be ready to shell out $2000 for an IBM IntelliStation POWER 285 running AIX, $1300 for a Sun Blade 2500, or $2000-$2500 for an SGI Fuel, to list just a few.

Of course, these prices are without shipping or possible customs fees. It will come as no surprise that shipping these machines is expensive. Shipping a UNIX workstation from the US – where supply is relatively ample – to Europe often costs more than the computer itself, easily doubling your total costs. On top of that, there’s the crapshoot lottery of customs fees, which, depending on the customs official’s mood, can really be just about anything.

I honestly have no idea why prices have skyrocketed as much as they have. Machines like these were far, far cheaper only 5-10 years ago, but something seems to have pushed them up. Quite a few of them are definitely not rare, so I doubt rarity is the cause. Demand can’t exactly be high either, so I doubt there are so many people buying these that they’re forcing prices up. I do have a few theories, such as some machines still being absolutely required in some specific niche somewhere, with sellers just sitting on them until one breaks and must be replaced whatever the cost, but nothing concrete.

Ultimately, though, the right price is whatever people are willing to pay, but I have a feeling we’re looking at some serious tulipmania here. UNIX workstations are pretty much scrap metal, with no real use other than for enthusiasts and the odd collector here and there. It saddens me that there are, apparently, enough of us ready to pay through the nose for what are essentially loud paperweights with power cords.

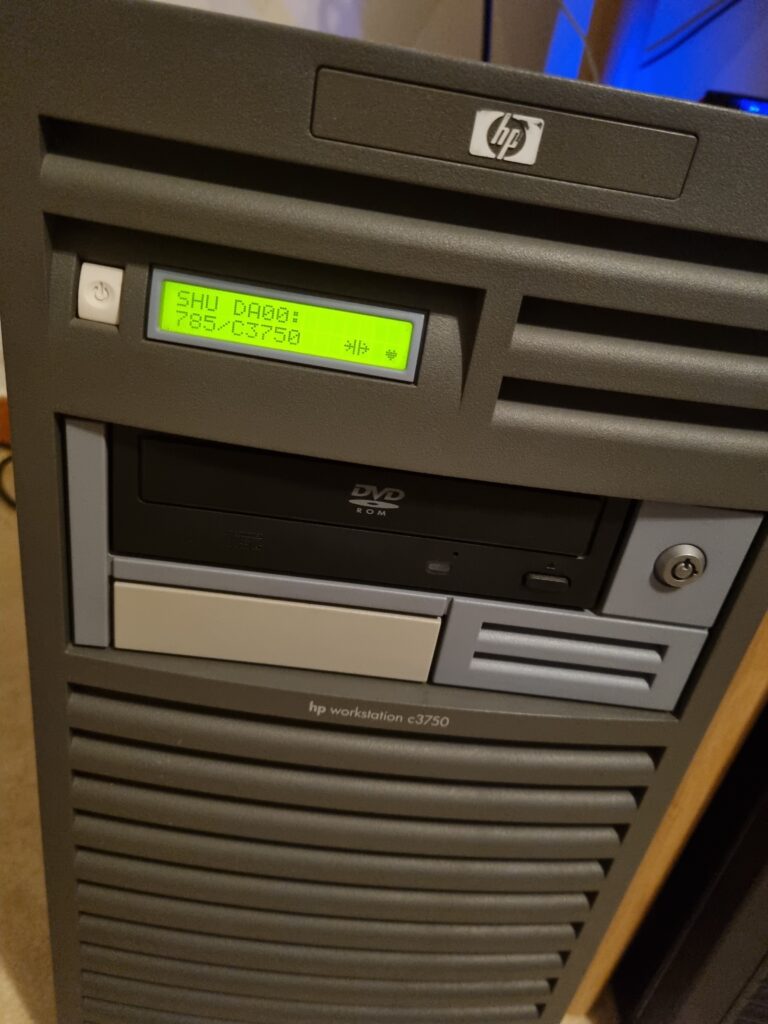

In the end, I got insanely lucky on eBay, and in April 2021 I managed to score an HP Visualize c3750 PA-RISC workstation for a mere €70 – a price I’m still not entirely sure wasn’t a mistake. The professional eBay seller I bought it from seemed to realise he’d made a mistake too, because it took a lot of back-and-forth and pressure to get him to actually ship the computer.

When it finally arrived on my doorstep (I apologised profusely to the mailwoman, who had to lug the thing around), I was happy to finally have my hands on this once very expensive piece of hardware.

And then things got worse.

Operating system availability: a pirate’s life for me

The c3750 I bought was designed to run HP-UX, Hewlett-Packard’s commercial UNIX variant. Sadly, my machine shipped without the software, either installed or on plastic, so I had to get my hands on the installation media myself. This is when I discovered just how hard it is to obtain some of the commercial UNIX variants.

In my naiveté, I just assumed I’d be able to go to the website of HP or HPE – the enterprise arm HP spun off in 2015 – enter my model number or perhaps serial number, and gain access to downloadable installation media, or at least an option to order the media for a small fee and have them shipped to my house. These were expensive enterprise machines, after all, and if there’s one thing companies like HP are good at, it’s supporting expensive enterprise stuff for a long time.

I was wrong.

First of all, there is no way to download HP-UX 10.20, 11.00, or 11i v1 (also known as 11.11), the versions of HP-UX supported on my PA-RISC machine. On top of that, there is also no way to order installation media. In fact, there’s no official way of procuring these versions of HP-UX in any way, shape, or form. I even contacted HPE specifically about this, but the conversation petered out very quickly.

So, I had no choice but to explore less… legal means of getting HP-UX. Archive.org was the obvious choice, but none of the 11.11 versions I found there seemed to work. I also tried various other avenues, to no avail. Every few weeks or months I would resume my search, but I never got any closer to HP-UX installation media capable of booting and installing on my hardware.

Almost 18 months after having originally purchased the machine, I resorted to asking my new followers on Mastodon – thanks emerald boy, I guess? – and the response was honestly overwhelming. I talked to everyone from fellow enthusiasts who were digging through their CD/ISO piles for me, to former system administrators experienced with HP-UX, all the way to former HP engineers who actually built and tested machines like mine back in the day.

They were incredibly helpful, and I’m very grateful for all their advice, stories, and tips. Some people even went so far as to grant me access to HP-UX ISOs they had stored on various private cloud services so I could try them out. After a lot of trial and error and back-and-forth, I found, in some dark corner of the web, a set of ISOs that actually worked. I finally had working installation media.

And then things got worse.

My installation media were dated 2003 or 2004, but from my 18 months of researching HP-UX 11.11’s lifecycle, I knew there was a massive number of patches I could apply to fix bugs, plug security holes, update certain open source tools, and so on. These patches were distributed in various forms over the years, usually on CD-ROMs and later DVDs, and they were even harder to find than HP-UX itself.

Much like with the operating system, HPE does not seem to list these patches anywhere, and there’s pretty much no official documentation available. You have to do a lot of searching, digging, and collating from unofficial sources to get even a vague grasp of what you need or what’s applicable, and eventually I think I got a reasonable grip on what to look for. As with HP-UX itself, I eventually found the right Support Plus and Hardware Enablement ISOs through a combination of Archive.org and spelunking through the private cloud collections I was given access to.

There was once a tool to download patches automatically from HP’s servers, HP-UX Software Assistant (SWA), but I have no idea if it supported HP-UX 11.11. Not that it matters: the tool is nowhere to be found, there’s no official documentation, and as far as I can gather, HPE no longer serves the patches online anyway, so even if you did find the tool, it would have nothing to download. It’s just another example of a major computer and software company not taking care of its heritage.

Regardless, as far as I have been able to determine, my HP Visualize c3750 – with its fancy 875MHz PA-8700+ PA-RISC processor, upgraded to 3GB of RAM and fitted with the fastest GPU I could find at the time, the FX10pro – now runs the most up-to-date version of HP-UX 11i v1 (11.11), the last version this machine supports, with all the latest patches. No thanks to the company that actually made the hardware and the software.

And then things got worse.

Third party software availability: black hole

Once I had the hardware, the operating system, and all of its various patches, it was time to find applications to actually run on it. Working my way up from the bottom, I figured I’d start with the base open source tools we’ve come to expect from any UNIX-like system. Luckily, I had already discovered the HP-UX Porting and Archive Centre, which is set up and maintained to do just that – to make public domain, freeware and open source software more readily available to users of Hewlett Packard UNIX systems.

Sadly, it turns out that the Centre simply does not have the means to support anything but the latest version of HP-UX – in this case, HP-UX 11i v3, running exclusively on Itanium 2. Any packages for older versions and architectures are either deleted, deprecated, or only downloadable manually – you can’t use their handy dependency resolver and downloader script either. I fully understand this – the HP-UX community is probably quite small, and building, maintaining, and serving these packages isn’t free. I’m already thankful the Centre exists in the first place.

The next step up would be actual applications, the kind of software everyday users would have run back when these machines were new. This is where things really started to get frustrating. Rather than detail every application I tried to get working, I’ll focus on two that illustrate the issues you run into very well: Pro/ENGINEER and SoftWindows.

Pro/ENGINEER is a CAD software suite that was available on a variety of platforms, including HP-UX. I have neither experience with nor understanding of CAD and industrial design software, but I wanted to get it running just to get an idea of what such software looked like when my machine was new. Finding a copy of Pro/ENGINEER for UNIX isn’t hard – several versions are available on Archive.org, including version 17, which I think might have been the last version available for HP-UX (but I’m not sure, since information is scant).

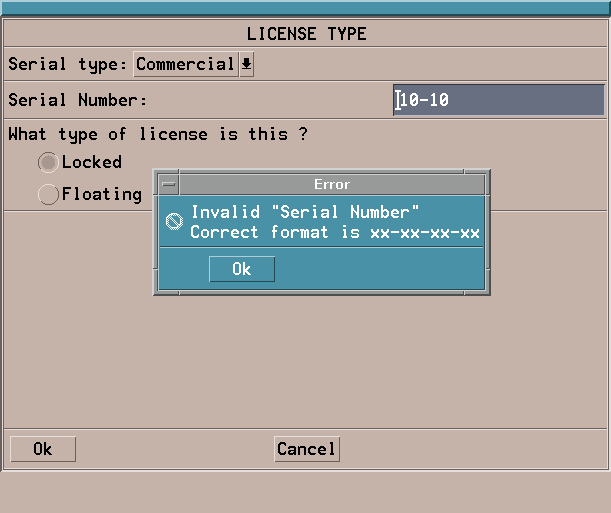

Installing Pro/ENGINEER 17 from the copy uploaded to Archive.org is a breeze. It’s technically for an older version of HP-UX, but in my use of Hewlett-Packard’s UNIX I’ve learnt that it has excellent backwards compatibility: any software I’ve tried for versions 9.x and 10.x also works just fine on 11i. Once you reach Pro/ENGINEER’s licensing step, though, you hit a brick wall. Software like this doesn’t use simple serial keys, but more complex licensing schemes and software. Of course, any licenses issued back then have long since expired, there’s no store that still sells them, and even the “demo” option apparently had an end date and stopped working a long time ago.

The company behind Pro/ENGINEER, PTC, still exists and sells a variety of professional CAD-related software packages, so I decided to contact them to see if we could work something out, such as maybe making a universal working license available for these older versions that anybody could use. I got a reply from a sales rep interested in selling me the current version, and after restating my question in a follow-up, communication went dead.

Another piece of software I was incredibly eager to try was SoftWindows, a package that combined the SoftPC x86 emulator with a copy of Windows, allowing you to run software for Windows 3.x, 95, and 98 on any of the supported platforms, including the various commercial UNIX variants. The company behind SoftPC and SoftWindows, Insignia, even entered into an exclusive agreement with Microsoft whereby it gained access to the Windows source code to further improve its software, and Microsoft made use of SoftPC – or, at least, parts of it – for the x86 compatibility layers in the various early non-x86 versions of Windows NT.

The first problem I ran into was that I could not find the version of SoftWindows for HP-UX that came with Windows 95, which I think is the last version they made for the platform. I also tried to find SoftPC itself, but that proved futile as well. This Windows 95 version definitely existed – there’s detailed documentation everywhere – but the software itself seems to have disappeared. This was a bummer, and further illustrates the need for software preservation efforts.

Luckily, an older version of SoftWindows for UNIX can be found on Archive.org: SoftWindows 2.0 for UNIX. It includes Windows 3.11 and MS-DOS 6.22, and can be installed on a variety of UNIX variants, including HP-UX. Even though it’s technically designed for an older version of HP-UX, it, too, installs without issue on HP-UX 11i. However, the keen-eyed among you may have noticed an ominous line in the Archive.org listing: “It uses FLEXlm so yeah good luck”.

FLEXlm is what is known as a software license manager, and was used by various software packages for UNIX (and other platforms) to manage their licenses. Licenses could be locked to a single computer, or distributed through a license server and allocated as-needed. In the case of SoftWindows 2.0 for UNIX, the Insignia FLEXlm License Manager window pops up at the end of the installation and whenever you start SoftWindows, asking for a serial number, an expiry date, and an authorisation code. In order to get the authorisation code as a single user, you had to complete your warranty card, add your serial number, FLEXlm HostID (a unique identifier tied to your machine), and server name, and fax it to Insignia, who would then provide you with the authorisation code.

It will come as no surprise that this no longer works. Not only do I have no clue how to send a fax, but the listed phone numbers are from the ’90s and no longer useful, because Insignia no longer exists; it was acquired by a company called FWB Software in 1999, which seems to have ceased operations somewhere in 2002 or 2003 (although I can’t find any definitive dates). FLEXlm, the license manager, went through a few different owners and ended up at Macrovision, which eventually spun FLEXlm and related software off as Flexera, where it still resides today.

This most likely means that even if I somehow managed to find whoever holds the rights to SoftPC and SoftWindows – either the original people from Insignia or the now defunct FWB Software – they probably wouldn’t even be able to generate licenses, because they can’t run the original software used to generate them. Flexera, meanwhile, probably couldn’t generate any SoftWindows licenses either: even if it managed to run the original licensing software to generate a valid license, it doesn’t own the rights to do so.

In other words, this incredibly interesting piece of software is stuck in limbo.

Pro/ENGINEER and SoftWindows are just two examples, but you’ll run into similar problems with countless other commercial software packages; they’re either impossible to find, impossible to license, or both. This is an absolute shame, since there are tons of fascinating pieces of commercial software for these UNIX workstations, software that played a huge role in all kinds of industries, from animation to industrial engineering, and that has effectively disappeared into a black hole.

There are other problems you run into when trying to explore the capabilities of HP-UX, too. The lack of accessible information from HP is always a massive problem. For instance, finding a version of Firefox that runs on HP-UX 11.11 was difficult – I still don’t know which version was the last version ever made, and it took me a lot of spelunking through dodgy FTP servers and god knows where else to eventually stumble upon an HP-UX depot (the platform’s package format) of Firefox 3.5.9. It installs and runs flawlessly, but unsurprisingly, has fallen victim to the TLS apocalypse, and I have no idea if any newer versions were ever made available by HP.

Worse yet are the cases where I know HP-UX has some amazingly cool ability, but due to the lack of documentation, information, and software from HP, I have simply no idea how to set it up, let alone use it. For instance, there are a lot of references to HP-UX being able to use smartcard readers and even fingerprint scanners for authentication, such as logging in, but which readers and scanners are supported? How do you set this up? What HP software do I potentially need? Who knows! HP-UX can also be used to manage and run thin clients, but which models, exactly, and how does it all work? Who knows!

At this point I doubt even HP knows. I want to buy a fingerprint scanner or smartcard reader that’s compatible with HP-UX so I can do silly things like logging in with a smartcard. I want to buy an HP-UX-compatible thin client and set it up so I can access the machine from across the house. I know HP-UX can do all these things, but getting the right software, documentation, hardware compatibility lists, and more is incredibly frustrating and hard.

Mass extinction

This whole thing has been an unpleasant experience. It has left me bitter and frustrated that so much knowledge – in the form of documentation, software, tutorials, drivers, and so on – is disappearing before our very eyes. Corporations big and small, through shortsightedness and a lack of interest in their own heritage, are destroying entire swaths of software, and as the years pass, it will get ever harder to get any of these things back up and running.

What would I want HPE to do? Not much – I’m not asking for the world here. I’m not asking them to release HP-UX as open source – never going to happen – or to set up an entire sales channel just for the few enthusiasts out there interested in HP-UX. All they need to do is dump some ISO files, patch depots, and other HP-UX software, alongside documentation, on an FTP server somewhere so we can download it. That’s it. This stuff has no commercial value, they’re not losing any sales, and it will barely affect their bottom line. If they want to get fancy about it, they can even set up a website to make it easier to find stuff, but that’s not necessary. And if they really want to gain some goodwill, they could dump all of it on Archive.org.

And would it kill them to perhaps send some financial support to the HP-UX Porting and Archive Centre?

As for all the third-party software – well, I’m afraid it’s already too late for that. Chasing down the rightsholders is an incredibly difficult task, and even if you do find them, they are probably not interested in helping you, and even if by some miracle they are, they most likely no longer have the ability to generate the required licenses or to release versions with the licensing ripped out. Software like Pro/ENGINEER and SoftWindows for UNIX is most likely gone forever.

There’s this idea that as long as someone still thinks of you, if you’re still in someone’s thoughts, you are effectively immortal. People like Genghis Khan, Julius Caesar, and that one pope who exhumed his predecessor to put his corpse on trial are immortal because we still think about them, we still write about them. This doesn’t apply to software – we can think about software, write about software all we want, but if we can no longer run it, it’s dead. It’s gone.

Software is dying off at an alarming rate, and I fear there’s no turning the tide of this mass extinction.

Addendum: I fell in love with HP-UX

Originally, my intention was to review HP-UX – write about my experiences using the platform in 2022 on one of the last UNIX workstations HP ever made. Sadly, as detailed in this article, I ran into so many issues with trying to use actual software that a review simply didn’t make any sense. That being said, I do have a few things to say about this UNIX platform, and that’s what this addendum is for.

First and foremost, my biggest surprise is just how well HP-UX and the CDE desktop perform on this hardware. It’s a mere 875MHz, but it feels much faster than that. Applications load almost instantly, and more complex software only seems to be held back by the mechanical SCSI hard drive rather than the processor or RAM. Even Firefox loads faster than you’d expect, and once it’s up, web pages load acceptably fast.

I also really enjoyed configuring the system. HP-UX’s SAM (System Administration Manager) tools are quite intuitive, easy to use, and very comprehensive. And even when you do have to dive into manually editing configuration files, they reside in logical, consistent places, and are easy to read and edit. I’m by no means a UNIX (or even Linux) expert, but I could easily find my way around the operating system, and despite its reputation as the ugly duckling of the UNIX world, HP-UX endeared itself to me, which made the problems with finding software and documentation all the more frustrating.

I’m now seriously considering trying to get my hands on an HP Itanium 2 workstation, the HP zx6000, just so I can move up to HP-UX 11i v2, experience better performance, and perhaps get more software to run. I feel like I’m going down the rabbit hole now, and there’s no way back.

In the spirit of last week’s hype, here’s a poem by ChatGPT about how FLEXlm should rot in hell:

“FLEXlm, oh FLEXlm,

With your endless misery and pain,

You make our lives a living hell,

As we struggle with your endless restraints.

Your licensing rules are a labyrinth,

A twisted maze that we must navigate,

With each turn bringing more frustration,

And each step bringing more anger and hate.

But in the end, we persevere,

For we are strong and we are smart,

We will find a way to overcome,

And leave you in the dust, you heartless piece of art.

So rot in hell, FLEXlm,

In the fiery depths where you belong,

We will rise above your tyranny,

And sing a joyful victory song.”

Not a workstation per se, but there’s a T1000 in the corner of my lab with my name on it. It’s already tainted with GCC from Blastwave because of the above though 😉

NetBSD lists this machine as supported in 32bit mode.

Might be worth trying it to breathe some life into the old girl.

http://wiki.netbsd.org/ports/hppa/

I still have an Apollo 715/50 with 256 MB of RAM that works. I’d be glad to assist with testing. (I can’t code for crap though)

Same for OpenBSD as well. 🙂

https://www.openbsd.org/hppa.html

NetBSD/hppa supposedly runs on the C3750 if you want to try it out.

http://wiki.netbsd.org/ports/hppa/

I own a 712 that I run NeXTSTEP on (yes, NeXTSTEP for PA-RISC is a thing), a B2600 that runs Linux, and a bigass 16-socket Itanium 2 RX8640 that runs Gentoo, although I never run the RX anymore due to electricity prices being what they are in the EU right now.

I used to have a bunch of these machines, like old C8000s. I gave all of them away pretty much.

You might enjoy your experience with HP-UX for what you are doing, but it’s pretty horrible to administer in a professional setting, tbh. Things like stale device nodes, the weird 32/64-bit memory management (mostly fixed from 11.31 onwards), the weird little tools to administer different aspects of the system like storage, networking, etc. … ifconfig vs lanscan, ioscan and so on.

It had cool tech too, like Ignite backups and the way they implemented LVM, but then VxFS was a thing too … meh

ksh88!!! Seriously.

If you think IA64 will give you better performance, think again.

I worked at HP during the HPPA to IA64 transition days. I used to work on migrations. IIRC Aries was offered as a way to run HPPA binaries on IA64. It was pretty awful.

When native IA64 binaries were becoming a bit more mainstream, performance didn’t pick up as expected.

With IA64, the compiler played a huge role in the quality of the binaries, but most workloads just weren’t a good match for IA64’s in-order EPIC approach.

I remember customers being pretty pissed when moving from the old Superdomes to the newer Integrity stuff. It just sucked lol.

I don’t know the state of Linux on HPPA these days, but it used to be quite good. Running Linux on one of these just makes them infinitely more usable and better overall.

I understand that you want to explore the old unix lands, but … sometimes progress is just that. Progress.

You can have your cake and eat it too. That is to say, the cool and quirky hardware, but also a sane operating system.

I always had the impression that IA64 won on premise alone: its spectre loomed so heavily over everyone else in that space that they all agreed to give up long before it properly hit the market. Only HP really bought into it, but by then Intel didn’t care, and after amd64 not at all. I’d be curious whether you’d disagree with that.

I liked running Linux on two RISC platforms, SPARC and Alpha; both ran well at the time. You’re certainly right that Linux, with all the software built for it, made working with them better than the increasingly curmudgeonly abandonware UNIX® variants. I think only Solaris really kept up, at least for a while.

As for progress, I’ve recently noticed a change in something I’d always taken for granted: even different CPU architectures are no longer well supported by open source packages on Linux (I’m thinking of ppcle issues, but it’s true on modern POWER and ARM too), let alone operating systems providing not grossly dissimilar interfaces, say Haiku. I can’t blame anyone, but it feels like portability as a concept is being lost.

IA64 is a long and complicated story.

The x86 (and HPPA) legacy hurt it in many ways. Just like with ARM today, the Windows crowd really wanted x86 backwards compatibility, and this was added to Itanium in silicon. The performance of this was atrocious. I mean … really really bad. Windows being Windows, of course, a lot of what ran on Windows servers was x86. Porting efforts were slow, or non-existent for a lot of software. This quickly got IA64 the reputation of being very slow.

On the (HP) UNIX side of things, things weren’t all that different initially. The need for HPPA binary compatibility did influence the IA64 core designs as well, but I don’t have much info on this. It wasn’t like the x86 compatibility mode, where an Itanium chip would actually run the x86 code.

As I understand it, it’s more like how the M1 has facilities to handle memory management and such differently with the flip of a bit in a register somewhere. In any case, it was also awful. It worked fine, but the performance was … shit.

So IA64 also quickly got a bad rep in the unix world.

HP pressured intel into including these things in the first place. And it wasn’t working out.

HP faced a lot of backlash from their customers (and rightly so).

Then there was the big problem with the compilers …

With Itanium, everything is in-order execution. The emphasis was on parallelism: Explicitly Parallel Instruction Computing, or EPIC.

A lot of the x86 bullshit was gone. Fixed-length instructions. You knew where each instruction was, etc. Good.

The bad is that you needed to order and pack these instructions into bundles, take care of the dependencies, etc in software in order to make this thing perform.

And that just never happened. Intel’s own compiler was the best one to use by far, but … it just wasn’t enough. The compiler couldn’t just magically fix everything.

IA64 would just stall all the time and performance just sucked.
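To make the bundling point concrete, here is a toy Python sketch of why so much rested on the compiler. It is not how Intel’s or HP’s compilers worked; it assumes a simplified model in which each bundle has three slots and an instruction may not share a bundle with an earlier instruction that writes one of its inputs. Real IA-64 encoding also involves templates and stop bits, which this ignores. `Instr` and `pack_bundles` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

BUNDLE_WIDTH = 3  # an IA-64 bundle has three instruction slots


@dataclass(frozen=True)
class Instr:
    name: str              # label only, e.g. "ld r1"
    dest: Optional[str]    # register written, if any
    srcs: Tuple[str, ...]  # registers read


def pad(slots: List[str]) -> List[str]:
    """Fill unused slots with nops, as a bundle must always be full."""
    return slots + ["nop"] * (BUNDLE_WIDTH - len(slots))


def pack_bundles(instrs: List[Instr]) -> List[List[str]]:
    """Pack instructions, strictly in the order given, into bundles.

    Toy rule (an assumption, not the real IA-64 rules): an instruction
    starts a new bundle if it reads or overwrites a register written
    earlier in the current bundle, or if the current bundle is full.
    """
    bundles: List[List[str]] = []
    current: List[str] = []
    written: Set[str] = set()  # registers written inside the current bundle
    for ins in instrs:
        conflict = any(s in written for s in ins.srcs) or (
            ins.dest is not None and ins.dest in written
        )
        if current and (conflict or len(current) == BUNDLE_WIDTH):
            bundles.append(pad(current))
            current, written = [], set()
        current.append(ins.name)
        if ins.dest is not None:
            written.add(ins.dest)
    if current:
        bundles.append(pad(current))
    return bundles


# Naive program order: each add depends on the load right before it.
program = [
    Instr("ld r1", "r1", ("r10",)),
    Instr("add r2", "r2", ("r1",)),
    Instr("ld r3", "r3", ("r11",)),
    Instr("add r4", "r4", ("r3",)),
]
# The same work, reordered so the independent loads sit side by side.
reordered = [program[0], program[2], program[1], program[3]]

for label, code in (("naive", program), ("reordered", reordered)):
    print(label, pack_bundles(code))
```

Under these assumptions, the naive order needs three bundles containing five nops, while the reordered version fits in two bundles with two nops. Since the hardware executes in order and does no such reordering itself, finding that second schedule was entirely the compiler’s job.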

When x86 got extended with amd64, HP dropped Intel like a sack of potatoes.

They were even going to port HP-UX to amd64, which of course never happened. They wanted to get rid of IA64 and just forget about the whole ordeal, but it wasn’t that easy …

VMS had already been ported to IA64, and HP-UX never got ported to amd64, so Intel had to keep selling and improving IA64 (they shipped the last units just last year), even with HP having betrayed them.

It’s hard to feel sorry for a company like Intel, but they really did get shafted on this one.

It’s not the first time Intel tried to move away from x86 either. The i960 comes to mind, as well as XScale.

I don’t think they are too keen to work on anything besides x86 anymore. Their previous attempts failed.

Although it is good to see them move into the GPU market with Arc.

p13,

It could have been really cool to program for and I really wanted one. But there’s no denying IA64 suffered from a major chicken and egg problem. Very little native software was published for IA64 and using it to run x86 software was insanely inefficient.

I agree. Intel was further hamstrung when AMD breathed new life into x86. Part of me actually wishes they hadn’t, because it continued the dominance of x86, which had already grown long in the tooth. But AMD64 ended up being very strong simply due to a high degree of backwards compatibility and mountains of pre-existing software, which, for better or worse, often determines the winners.

You hit the nail on the head. It put a new emphasis on explicit parallelism, which is fine, but the industry wasn’t ready for it. I actually think they were ahead of their time. It would make a far more interesting competitor for CUDA-like applications, NOT the traditional applications and databases I suspect enterprises were buying them for. I have a feeling very few were using IA64’s real potential. To make matters worse, it was completely out of reach of consumers and particularly gamers, who largely propelled GPUs to mass adoption.

Intel did have some good ideas, but they never overcame the chicken and egg problem, and they priced themselves out of reach of the developers who could have helped. 🙁

Yeah, I think they’ve learned that they have very little control over the software ecosystem (unlike Apple, by contrast).

It is good. People have been very quick to criticize ARC next to AMD and Nvidia products, but it’s still early. I hope Intel is able to continue evolving ARC into a competitive alternative.

Basically. They didn’t realize they needed to flood the market with cheap chips to build a base, like they did in the ’80s. They flooded the market with cheap embedded chips and people started building things with them.

Intel probably could move to a new base these days. There is enough experience with emulators to make the transition fairly seamless.

Switching to x86 only was a conscious decision on Intel’s part. They got too wrapped up in reading their own marketing. They forgot they were a fab company and started believing they were a technology firm.

People had unreasonable expectations, and Intel hyped the thing for 2 years which didn’t help.

So, they got shafted in their attempt to kill competition in the dominant architecture, and thus to shaft us all.

Yeah, you’re right. It’s impossible to feel sorry for them.

“it feels like as a concept, portability has been being lost”

It is my impression that where previously “portability” was the responsibility of the application, it has largely become a feature of the operating system, with everybody but Windows being expected to support POSIX (and even Windows, one could argue). Even POSIX is rapidly morphing into the Linux API.

One benefit of this, of course, is that anything that does implement POSIX inherits a fairly substantial base of applications (historically the biggest killer of alternative operating systems). The downside is that every operating system seems more and more like a thin wrapper around POSIX and maybe even the most popular Linux GUI toolkits.

Portability is largely a feature of the programming language, and it’s been built into the language tooling and libraries. It’s been abstracted. We’ve learned a few things over the years. 🙂

The hardware is abstracted by the OS, and the OS is abstracted by the programming language tooling and libraries.
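As a small illustration of that layering, here is a Python sketch using the standard library's `pathlib`: the application code never spells out a path separator, and the library's path classes apply each OS's rules. `PurePosixPath` and `PureWindowsPath` are real `pathlib` classes; `build_path` is just a hypothetical helper for this example.

```python
from pathlib import PurePath, PurePosixPath, PureWindowsPath


def build_path(flavour: type[PurePath], *parts: str) -> str:
    # The caller never writes "/" or "\\"; the path flavour knows the
    # separator rules of the operating system it models.
    return str(flavour(*parts))


# Real code would simply use pathlib.Path, which picks the right flavour for
# the OS it runs on; the Pure* classes let us show both flavours side by side
# on any machine.
print(build_path(PurePosixPath, "home", "user", "notes.txt"))    # home/user/notes.txt
print(build_path(PureWindowsPath, "Users", "user", "notes.txt"))  # Users\user\notes.txt
```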

There needs to be hardware and software diversity for portability to work or be worthwhile.

The NetBSD and OpenBSD teams put a lot of effort into maintaining their hardware garden, and even they have to retire ports sometimes when it’s not feasible to maintain the hardware anymore.

Companies just aren’t sinking money into weird, homegrown ISAs in an attempt to lock people onto their platforms anymore (Outside of Nvidia and their GPUs).

One minor correction: IA64 didn’t kill the RISC workstation market. Sure, it nearly did due to the looming spectre effect you mention, but eventually didn’t, because Sun, SGI etc. resumed their investment in their RISC architectures once they realised that IA64 wasn’t the steamroller everyone thought it to be. x86-64 is what really killed the RISC workstation market. It’s no coincidence that the UNIXes that are still with us to this day (Solaris, MacOS X) made a transition to x86-64 and sold lots of x86-64 boxes. Simply put, there is no way all those RISC workstation vendors could compete with AMD (and later Intel) on sales volume (and hence R&D money) no matter how many workstations they sold.

An interesting historical detail is that Apple also sold x86-32 Macs, which boggles the mind because it was literally the last year Intel made x86-32 CPUs (they moved to the 64-bit Core 2 Duo the year after). Couldn’t Apple wait one more year before announcing the transition? It’s the reason Photoshop CS6 won’t run on modern versions of MacOS X/11 (it was released as 32-bit to be compatible with the 32-bit Macs sold during that one year).

I played with IRIX, HP-UX and Tru64 (as it was then) in the early to late 2000s. They were already dead, or fading, otherwise how could I afford them – but I really enjoyed these systems, and they were well enough supported, at least then, to still be working tools, and especially software targets.

All a platform really needed was Firefox, or Mozilla as it would have been initially – and if that could build, it probably had a good set of GNU software, thanks in part to the efforts of Sunfreeware, Blastwave, or HP P&AC.

They seem like the last vestiges of the altruistic web now.

And the platforms could still do things that seemed like sci-fi: Sun Ray thin clients on a 167 MHz server playing video (RealPlayer, at that), including Futurama.

I guess it was useful learning, as these days I partly support commercial remote desktops, though only in the most mundane way possible.

I wish they ran as well.

Aside from the thought association with what many of these systems were most used for, it drives home how much I miss not having such an insipid monoculture.

SPICE works really well as a remote desktop protocol, but that’s mostly for a VDI setting. Video, audio, etc, not a problem. Desktops run at a much, much higher res too nowadays, so there is that to keep in mind.

I used to run an Indigo2 Impact R10K as a desktop for a long time. I ran IRIX on it, of course. Linux on these systems only got fixed somewhat recently by Rene (of T2 and PS3 Linux fame).

It worked, and the hardware was genuinely different, but at the end of the day … generic x86 hardware with linux on it was just better in pretty much every single way.

Nowadays, I just want something that works. I do still own and play around with a lot of these exotic machines, but I’m more interested in the challenge of porting things and just keeping them alive. They are not really useful in any way. Sure, they are computers, and sure, many of them were “ahead of their time”, but really … even a Raspberry Pi runs circles around a lot of this stuff.

It’s funny in a way. I had to deal with Solaris, HP-UX, AIX, etc. a lot back in the day in a professional capacity.

Man … it just sucked. Some more than others. HP-UX is still around here and there. AIX sure still is.

I’m sorry, but I’m not actually sorry. There’s a reason this has all faded into obsolescence.

The hardware was very expensive, licensing was very restrictive. Just expensive overall.

Linux on x86 took over, and for good reason. All of these companies that used to run UNIX on proprietary hardware pretty much all moved to Linux. You can run it on cheap commodity hardware, virtualize it easily, run it in the cloud, on your laptop, PC, etc. It’s free, it’s open source, performance is great, reliability is great, etc.

The only sad part is that we are still stuck on x86. An ISA where the branch prediction, decoder and just general OoO stuff takes up more transistors than the whole processor of these older archs (except for the cache of course).

Once more sane architectures make it into the mainstream, with a hopefully open platform, whatever that ends up being … u-boot, EFI, petitboot, coreboot, whatever … then one of the last long-standing problems with general computing will be solved … at least IMHO.

I’m also a big fan of Haiku as a more desktop-centric approach to computing. I really hope it gains more momentum, but Linux to me is pretty damn great as a general purpose OS.

I don’t miss Slowaris, and I certainly don’t miss HP-sUX.

“still stuck on x86. An ISA where the branch prediction, decoder and just general OoO stuff takes up more transistors than the whole processor of these older archs”

That has little to do with x86; it’s more a function of the microarchitecture.

E.g., the M1, which uses the ARM ISA, actually uses way more transistors for the predictor/decoder/register windows/ROB/etc. than its x86 competitors.

It can be easier to get a SPARCstation 5 than a Pi nowadays, however.

cevvalkoala,

Haha, that might be true. RPi has had supply chain issues since its inception (even before COVID, although that has made it worse).

You can find other brands of ARM SBCs (Banana Pi & Orange Pi), but support isn’t as good. I’ve found the hardware itself actually works well, but one day you’ll go to update or install something new only to discover it cannot be updated and the vendor won’t be providing any new kernels. Boy, I’d really like to see a day when all ARM SBCs could just work as well (and as long-term) as x86. Having to replace working hardware due to the lack of software support is a punch to the gut. With x86 hardware, at least I know I’ll be able to build & boot whatever software I need long into the future.

Between this and the procurement issues, ARM SBCs never seem to reach their potential as a straightforward go-to solution.

I want to have *zero* ties with the hardware vendor to provide software, since IMHO it’s best to keep them separated. Mainline Linux support would win the gold from me, but I’m not sure that any of these ARM SBCs are 100% functional with mainline kernels. There always seems to be something missing…

https://test.www.collabora.com/news-and-blog/blog/2019/03/12/quick-hack-raspberry-pi-meets-linux-kernel-mainline/

I really wish SPICE would get more support. It certainly seems like an upgrade to VNC and a good alternative to RDP.

Pretty sure some of the cracking groups have broken FlexLM. Every few years a copy of Maya for Linux ends up on torrent sites and includes a working crack. Maya uses FlexLM licensing. Guess you’ll need to dig deeper into the piracy/warez scene to get that software running. SGI IRIX is pretty nice in this sense. It has a lot of software available in repositories, since the SGI fanbase is fanatical about keeping the platform alive, and since SGI died no one is bothering to enforce copyright on any apps that get distributed. Nekochan is sadly gone, but it lives on in mirrors.

Yes, but did any of them work on HP-UX software? Haha.

I don’t actually know the answer, but HP-UX may not have been popular in the cracking scene.

I seem to remember something about hijacking DNS and spoofing the network service being involved with FlexLM circumvention. Nothing BIND and Apache couldn’t fix. 🙂

SGI didn’t die, it was absorbed by HPE. And they are quite the douche flutes when it comes to SGI’s IP, especially IRIX stuff. Which is a shame, because they are not doing anything with it.

FlexLM is well known by crackers; you should find lots of articles on it. The way virtually all devs used it, it is fairly trivial to crack around, i.e., find the one API call out to validate the license and change the function to return 0 instead of 1 (or whatever the success code is). I’ve done it before.

A dev could get all fancy, encrypt the binary, hide the API calls, have multiple (even periodic) license checks etc. But virtually nobody bothered.

If one wanted to make a key generator, FlexLM uses a pair of keys (minuscule by today’s standards). Plug them into the sample code and Bob’s your uncle.

Hey Thom, you’re probably getting spammed a lot since this post hit Slashdot. I have a small collection of RISC workstations I could loan you if you wanted to get some experience, and I live in Europe 🙂 Get in touch if you like.

My C8000 is my go-to machine for learning C (it dual-boots HP-UX and Linux 5.10). It runs HP-UX 11.11 with access to a bunch of patches and all the accompanying software, including SoftBench and aCC.

I also got in touch with the Archive Centre and they helped me clone the whole catalog of software built for 11.11 hppa.

I’ve owned an IntelliStation 285, which I bought new old stock from a company in the Netherlands, and I also own an SGI Octane.

The C8000 is my favourite of the bunch. It is the quietest of the UNIX workstations and extremely pleasant to use. Plus it can be booted from IDE easily, so I don’t need to worry when my SCSI disks all die. It’s also very easy to service.

I’m looking for a machine like yours to be able to run HP’s proprietary graphics APIs. The FireGL that comes with the C8000 only runs OpenGL software.

Let me know if you need any help via my Gmail.

RE: the cost of the machines on eBay/resellers.

You hinted at the answer in your article, the cost is driven by companies that still run these boxes hidden away in the corner doing the one critical thing that they still do. When it dies, these enterprise companies have to scramble to fix it. They are willing to pay thousands of $$ to repair/replace rather than to move the one critical thing off of it onto something modern. The cost benefit still works out.

You are a bit late to the party; most of the enthusiasts would have cycled through owning workstations years ago. You can still find enthusiast mailing lists that would offer much better pricing (even to the point of free) for these systems going to other enthusiasts. You are trying to buy from retailers that only cater to the enterprise companies fixing their critical box that nobody left understands but that still drives lots of revenue.

+1 This is a huge part of that market.

In general, the kind of person who wanted these machines out of their property sold them long ago to some collector or sent them to recycling. Any used machines you see now come from the rare collector who is forced by life circumstances to sell, or from people who bought from said collectors with the intention to resell.

Please try to understand this Thom: Nobody will safekeep something for you in their property for a decade so you can come and buy it when it’s convenient to you.

So, to answer the question:

One word: Safekeeping

BTW a similar story happened ~5 years ago with PS3s, PSPs and Nvidia 3D Vision equipment. You could see people selling their PS3s and PSPs with their entire game collection for a song. You could also find Alienware 3D laptops sold at regular eBay Alienware prices. Last year I checked to see how much a pair of Nvidia 3D Vision 2 glasses costs because I wanted one for my parent’s house, and whoa! prices start from 3x MSRP. I’m glad that 5 years ago I bought the glasses at MSRP and my two Alienware Kepler laptops with 3D Vision for regular eBay Alienware prices. Now the nice Kepler laptops are unobtanium and the Fermi ones are sold for half their original MSRP. It’s a collector’s item now.

A similar thing is happening with Intel Macs right now: lots of people are unloading them on eBay not because they want to make money off them but because they don’t want them on their property anymore. 5 years from now you will see them in boastful eBay ads with more exclamation marks than a Hallelujah song, sold at much higher prices.

Back on topic, my favourite machines were SGIs (we had a variety of Sun Ultras and SGI O2s at the uni). I consider IRIX to be the best-looking of the Unixes, easily a MacOS X from an alternative universe, and IRIX has lots of off-the-shelf software like Maya available, unlike the other Unixes, which were mostly used for internal number-crunching and database stuff and don’t have lots of off-the-shelf software available. It’s a bit sad that Solaris made the jump to x86 but IRIX stayed on expensive MIPS hardware that’s getting rarer by the year.

In general, the kind of person = In general, it boils down to the fact that the kind of person

(sorry)

Yes, like most things, there are various stages:

1) full price supported product from the vendor, easily obtained if you have the money.

2) vendor loses interest, most customers no longer want the kit and offload it cheap/free, most ends up in landfill while a small amount is snapped up by enthusiasts or those looking to hoard for future resale.

3) only a tiny niche market remains – enthusiasts and a tiny percentage of original customers who are locked in to the equipment; meanwhile existing hardware starts to fail due to age. Those customers who are locked in have no choice but to pay whatever someone demands.

I have a garage full of old equipment, some of which no doubt doesn’t work any more – for instance, I recently tried powering on an SGI Octane only to have something pop in the power supply. I bought most of this stuff quite cheaply at stage 2 above, so it would probably sell for more than I paid – if still working, at least.

MIPS hardware is still being made, and IRIX source code has been leaked a few years back… I wonder how much effort it would be to port IRIX to run on a current MIPS device. At least running headless should be relatively easy, and once you had the kernel booting the userland should work ok given the compatible CPU.

That’s an unusual thing to happen to computer hardware while in storage. My guess is your hardware was stored in a place that exposed it to extreme temperature swings (which damaged it due to the contraction and expansion of materials) or more likely condensation formed inside the machine. A common trick in such cases is to take the machine to your living space and let it stand for a day for condensation to evaporate. Then leave it plugged in without turning it on for one more day for residual electric current to remove the remaining condensation. Or avoid storing your machine to the conditions that cause it if possible.

I store all my electronics in living space, which means the only problem I have to worry about is batteries going flat (batteries lose a little bit of charge when in storage, and if they go below a certain voltage, they will not charge again, and if they go to actual 0 volts, they will start swelling and destroy the hardware too). So, it’s a good idea to charge your batteries to 80% once every 6 months (you can also charge them to 100% but it may cause a capacity penalty). But I never had issues with the actual electronics.

kurkosdr,

I’m sure you’re right that heat & cold aren’t great for electronics in an attic or garage, or even in a rented storage facility. But climate-controlled storage seems like a luxury that most people can’t afford. No? I don’t have the luxury anyway. I do wish I had the space. Incidentally, my wife wants me to give up my workspace to give the kids their own bedrooms, and I’ll have to move back into an unheated garage. It gives me anxiety just thinking about it, haha.

Don’t forget about a huge user of these legacy systems: the US government. If it’s still running, then why spend taxpayer dollars? Oh wait, it stopped running? Congress will approve capital upgrades in 5-7 business years, as long as they get matching funds for [highway construction, etc.] in their home districts. Supporting a cottage industry of US-based computer repair shops who scour eBay etc. for a markup to maintain current functionality in the interim is just the cost of upkeep.

Okay, here’s my 2 cents on this. Back in the late 1990’s, early 2000’s, I distinctly remember (and we all know memory is crap – right?) that everyone believed IA64 and Windows would dominate the world. I even remember talking to someone at Sun about the fact they’d ported Solaris to IA64 because it was just … inevitable. In the era of x86 being decidedly 32 bits because Intel said so, System 7.5/8, and Windows NT 4, I developed an interest and appreciation for Linux. It gave me the server experience … sort of. You had to squint a little – but not much.

What if I wanted a Solaris workstation to develop on? Well, there’s no ‘price tag’ on that. A sales person has to get compensated (and as a former Sun reseller – I can vouch for this). So the customer has to call. How many workstations? What price breaks? Does the customer already have a contract? How about support? And software? And support on that software? For 1 year? For 2 years? How about training? Do you need an install on site? What tier of on-site support? The total price comes to $XY,YYYY.ZZ. Somewhere in there is a line item for a Sparc Ultra 5 and a copy of the Sun compilers, but the pricing is tied up in all the other things ordered so it’s not really a price. And no sales person really wants to deal with 1 workstation (until things get very, very bad).

In the early-mid 1990’s, if any one of the vendors ever got their s**t together, I think they could have eaten NT’s lunch. But, they were never going to do that because they’d spent the 80’s cutting each other to shreds. And being competitive would probably mean putting a sticker price like 1,999 on a base model and no sales person would really get compensated in the same way. That would mean sales people lose interest and sell only things that make them money. And they stop selling your workstations. (To be fair, Sun did this later on – but at the worst possible price you could pay for a machine.). It seemed that by 1998/1999 the writing was on the wall. You could get a bare bones Solaris 2-socket server for somewhere around 30 grand. You could get a dual socket server (especially white-box) for about 3,000. I can buy 10 of those for the price of 1 Sun box and let’s say 1/2 the performance. (Let’s give the Unix systems the benefit of the doubt). And, as a startup or solo ISV, I don’t have to deal with the sales bullsh*t that makes me want to claw my eyes out.

Couple all of this with managers willing to buy you a much cheaper Windows machine (NT is 32 bits, right – quit whining). And very parsimoniously fork over for 1 license of Visual Studio. Can’t you just install from one of the other licenses from one of the other engineers? No? Maybe someone running AutoCAD needs that big HP – most people don’t. Besides, the roadmap laid out by our CTO sees NT taking over. So you won’t have those porting-to-HP/UX problems for long, because HP will just run NT. So read your Win32 Bible, read up on COM programming, and study C++, because that’s going to be the next 20 years. No, seriously, don’t focus on Java or Linux – those aren’t for serious development – our CTO has the roadmap they got from Gartner or whatever.

So there’s no interest in preserving any of this from Sun or (I throw up a little in my mouth whenever I say this – Oracle), HP, IBM, or whoever. They don’t want to, in any way, jeopardize any deal or sale that is “retire HP/UX and move to Linux – because that’s what our CTO says is the future.” Because you don’t piss off the sales people by telling them that “history buffs” can get HP/UX for free. Because they’re currently working on a $XX,XXX,XXX deal to retire some HP/UX systems rotting in a government basement. And if they get new licenses….

Yes, the age of $50K+ workstations is dead. Because, generally speaking, you’ll do more and get more out of a $500 Linux PC today.

But still, if you ever used Apollo Aegis or DomainOS, you know there was some pretty cool tech there that HP chose not to pursue. Which is sad.

The idea of running Interleaf 4/5 transparently off the disk of a different host by merely changing an environment variable…. there’s no equivalent of this today. And that was all done at crap token ring speeds.

I used to work at HP, back in the day. My personal favorite was the HP 9000/735, a blazing 125 MHz monster.

Nostalgia and history aside, I don’t honestly see the point. GNU and Linux today is a much better and more capable operating system than HP-UX ever was. x86 systems are much better hardware than even the best PA-RISC/MIPS/PowerPC/SPARC systems of the day. My guess is you could write an instruction-level PA-RISC emulator, run that on a run-of-the-mill desktop PC, and have better performance than the B-class (trying to remember the code name, was “Raven” the B-class or C-class?) you resurrected. And I don’t even want to think about how much faster graphics cards are today than the $100,000 graphics subsystems we used to sell.

I wouldn’t think of trying to talk you out of your hobby. I like woodworking using hand tools, regardless that my router can make a better dovetail than I can by hand. But I wouldn’t get all misty-eyed about it. There’s a reason the killer micros wiped out the RISC workstation architectures and why Linux crushed proprietary Unixes.

Check this out:

https://forums.irixnet.org/thread-1289.html

Post #5 is what you need for SoftWindows.

If it fails, try adding this as a first line:

SERVER 127.0.0.1 ANY

It’s the attitude of commercial vendors as a whole. If they can’t sell something for a decent enough (for them) profit, then they would rather lock it away so that no one can have it at all.

I’ve always considered any license enforcement code to be a denial of service vulnerability that needs to be patched.

I must admit, I worked for one of the software vendors for HP-UX, with our own custom licensing nonsense. As an intern, I was powerless to persuade them to do anything about it. I have a copy of our software they released for Windows, but alas, they shut down the validation server for licensing. They still sell it today for modern Linux/Windows, but at insane prices that I can’t justify for my own uses. No one seems to care about the really interesting but not popular software packages; I know, maybe because they weren’t popular. Still sad.

In the northernmost province of Sweden there is a small town called Piteå (second largest in the province, but still tiny), and outside that town lives a guy who has just about every UNIX workstation ever made in his enormous storage facilities. I have been allowed to visit once, and there were shelves upon shelves with colourful SGI machines galore. He also has every Mac model and, as far as I know, every SPARC machine that came as a workstation. He also has some minicomputers.

Piteå is not far from where I live (I’m farther up north). Is this for real?

Absolutely, but as I said, I do not think he lets anyone in to take a “walkabout” among his stuff. The village is a few km north-east of central Piteå along the coast.

Vendor lockin is for the birds. It’s games like this which is why open source spread so widely. It reduces the risk of the vendor getting froggy/acquired/dead and leaving people high and dry. With open source, at least there is the option for people to pick up the abandoned software.

HP is a crap company, and they have everything, somewhere. You’re just not in a position to sign a multi-million dollar support contract to get HP’s attention. This is the company which was going to charge for firmware updates after all.

I’m going to say, the most accurate reproduction of a vintage Unix workstation today is the RPi 400.

– [x] Slow multi-core processor.

– [x] Limited RAM, but massive compared to its peers.

– [x] Poor IO, but at least as fast as the SCSI drives of the time.

– [x] Video good for basic 2d, but gets stressed with 3d.

– [x] Hardware requires questionable proprietary drivers and firmware to work.

– [x] Ostensibly an open platform based on a familiar OS, but the vendor provided OS injected with secret preservatives and additives works the best.

– [x] Obscure license required to unlock hardware functionality which should be a basic feature (Encryption hardware).

– [x] Chips made by an arrogant hardware company that might actively hate its customers (Broadcom, maybe RPi too after last week’s flameout).

– [x] Proprietary form factor that looks good, but makes upgrading difficult to impossible.

The best modern Unix workstation: A NUC with Fedora.

The best modern retro Unix workstation: Dell Optiplex (SFF/Micro) with OpenBSD.

Feel free to debate those last points. I could be convinced to swap OpenBSD for NetBSD, Slackware, Arch Linux, or Void Linux.

Personally I don’t care much about old, slow, limited systems. Anyway, you’re probably right, but Arch might also work for a modern Unix workstation. Also, a NUC or Dell doesn’t compare well to an SGI piece of art. In fact, I believe the main reason people still love these old machines is the looks…

https://upload.wikimedia.org/wikipedia/commons/thumb/9/95/SGI_Fuel_workstations.jpg/800px-SGI_Fuel_workstations.jpg

Slow, limited systems are a fun challenge. Not for work, but for personal stuff. It’s easy to hit the limits, and the software requires optimization and tuning.

I thought about Arch or EndeavourOS over Fedora. I haven’t run them day to day, though.

Arch does provide a much cleaner base system, and it’s something I’ve been exploring for containers.

If we really thought about it, something with a Xeon and RHEL would be more exact.

SGI did make some pretty cases. Pure ’90s. I do remember some ’90s Acers which would compete with the SGI as an art piece. 🙂

I’m a pizza box fan, so NeXTstation, SPARCstation for me. 🙂

https://blog.pizzabox.computer/pizzaboxes/sparcstation20/

https://blog.pizzabox.computer/pizzaboxes/nextstation/

NUCs and Dells are the best approximation of a modern pizza box, I’ve found. Mac Minis are up there, but much more limited than the other options.

Going custom opens up a world of cases. Everything is kind of limited to the tower form factor, but there are some cases out there which SGI would be proud of.

Really beautiful those cases. A lot of design and love was going in those products, when they were still special. I miss the ’90s 😉

If you have some applications running on SPARC that cannot be migrated to another platform, Stromasys has a SPARC emulator on x64 that works great. It emulates the SPARC processors, so your software is untouched.

https://www.stromasys.com/solution/charon-ssp

Configure, copy your current SPARC boot disk over to the Charon-SSP host, boot.

An interesting feature is that x64 processors typically run faster than the SPARC processors, and with maximized memory and high-speed disks your old application will run much faster!

I remember using Alpha workstations back when I was at university in Aberystwyth in the mid 90s. They were lovely graphics workstations, very smooth, although the only software I had access to was the web browser, but it was nice to see more text on a long window than you could squeeze onto a PC monitor. (They also had two rooms full of Sun machines, but only CompSci students were allowed to use them.) A few years and PC-based Linux desktops later, I got hold of a Sun Ultra 5, I think it was, on eBay after seeing a letter in Linux Format about them and got the free download of Solaris (not sure if 9 or 10). Couldn’t believe how difficult it was to install third-party software and how clunky the download tools were. Linux OSs had proper package management (usually APT at that time) and Sun hadn’t bothered to copy it despite it being free to use and copy. And the desktop was pretty ugly. It ended up as a rest for my old eMac, which didn’t have a stand and was a bit low on my desk.

BTW I do find the whole “digital heritage” angle in the article a bit unrealistic. Companies only care about their heritage if it brings them money, aka if there is a big enough crowd who will pay to experience that heritage. Otherwise, corporate heritage is a money pit that puts HP at a competitive disadvantage to much newer companies that don’t have such heritage.

The real problem here is online DRM. Online DRM is simply evil, since it can make purchased goods disappear before your eyes. At least with offline DRM like PS2 discs or SecuROM CD-ROMs, if you own a copy of the product and the relevant period-correct hardware, you still own the thing, with online DRM your ownership can vanish before your eyes. And yes I consider phone activation to be online DRM too, since it can also be taken away.

IRIX is what got me down the path of cyber security. SGI’s O2 workstations shipped with IRIX 6.3, which included telnetd and an “lpr” account with no password. Companies started hanging these “tiny” servers on public IP addresses with no OS hardening, and you could just telnet into them and have a look around. We were already running NetBSD and OpenBSD on beige-box PCs, so we were early adopters of the Linux and BSD ports to RISC architectures, because they were more secure by default than most commercial UNIX releases. But when we had to build production firewalls that could carry a support contract, we chose Sun pizza boxes (Ultra class) because we could install Check Point on them. At home, I was running Debian and OpenBSD on SPARC and UltraSPARC machines because they were simply better operating systems, with more software available even then. So I don’t really understand the fascination with running AIX, HP-UX, and Solaris on old architectures… They only really shine on modern systems, like what’s left of Oracle’s SPARC hardware or some of IBM’s latest gear, and even then you’d need a viable use case to make it worth the extra money.

Of course PTC would refuse to help you. I mean, their primary software “Creo” is user-hostile to begin with.

Who else designs software where you have to long-press the right mouse button to perform a standard right-click operation? Or how about some commands being bound to “triple-click the middle mouse button”?

No. I’m not joking. This is PTC in a nutshell.

I loved the article, sharing my passion for UNIX/RISC workstations.

One correction, though: the UNIX still going strongest is AIX.

IBM still actively sells and supports it (albeit with much less focus than a decade ago), and its CPU architecture (Power) is still being developed, with new models released regularly.

Thom mentions that his system feels faster than what might be expected from clock speed. This definitely is true with the SGI systems I have. A big part of this is the very low user interface latency engineered into these old workstations. When I open a terminal window, it *snaps* open, even on relatively low end Indys.

Here is an interesting post that discusses latency on some of the older systems: https://danluu.com/input-lag/

josehill,

Very informative link, thanks for posting! For all the gains we’ve made, they’ve often been accompanied by regressions in bloat and complexity.